Using the BETA Fluent API to import blog posts from an RSS feed

As a side project, I’m experimenting with moving Sitefinity Watch to the Sitefinity 4.0 BETA. As of this writing, there are no migration tools that can be used to migrate blog posts from Sitefinity 3.x to Sitefinity 4.0. We will eventually deliver these migration tools, but this project gave me an excuse to experiment with using Sitefinity 4.0’s Fluent API to import content.

Ivan Osmak highlighted the Fluent API in our Sitefinity 4.0 BETA webinar. The Fluent API enables .NET developers to query and manipulate Sitefinity data in very powerful ways.

Disclaimer: What I’m going to share in this blog post needs a lot of work, and I’m not yet done with it. Later I’ll explain the issues I still need to solve. However, rather than sit on this code for weeks, I wanted to share what I have working now and iterate later. Alternatively, if someone wants to steal this code and extend it…feel free. I will gladly link to your blog posts and credit your work.

My Very Basic Goal

As a first step, I simply wanted to point to an RSS feed and import its items into a Sitefinity 4.0 blog. This obviously isn’t a full-fledged import utility, but it’s a reasonable first step.

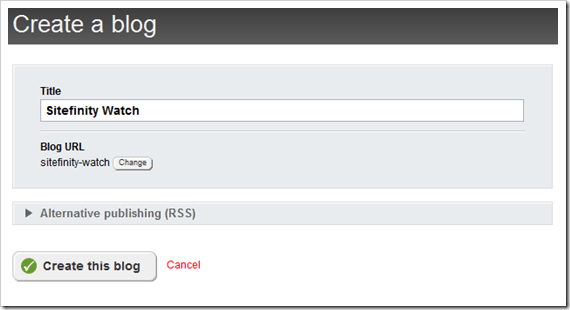

Step 1: Create a Blog

Before importing blog posts, I needed to first create a new blog in Sitefinity 4.0.

This was the easy part.

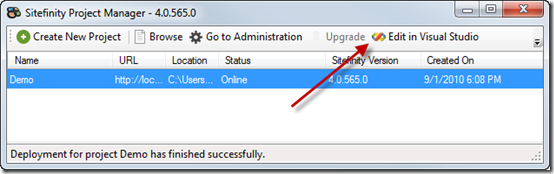

Step 2: Open and prepare the Sitefinity project

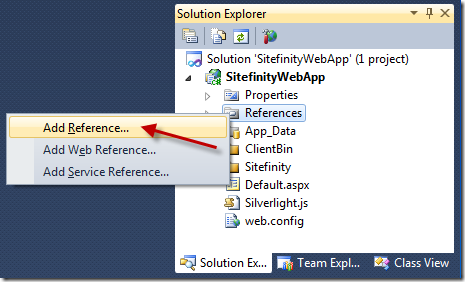

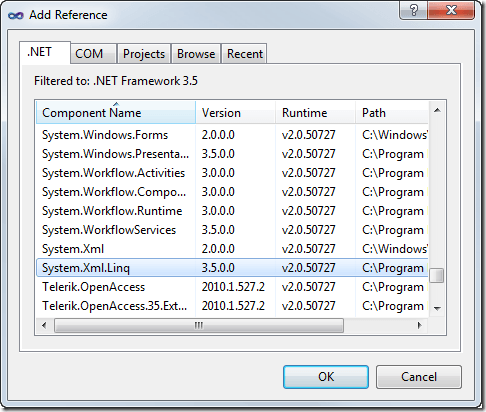

Some assembly references need to be added before custom code can be added to a Sitefinity project. The instructions (and links) below describe how to prepare a Sitefinity project for custom development.

1. Open the Sitefinity project in Visual Studio

2. Add the missing assembly references. <- Important!!

3. Add an assembly reference to System.Xml.Linq

The project is now prepared for custom development.

Step 3: Create a Blog Import ASPX page

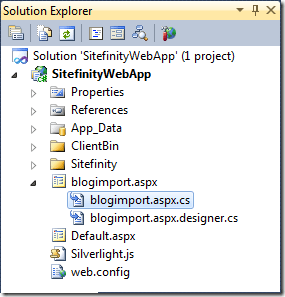

To import blog posts, I needed to run a small bit of code within the context of my Sitefinity web site. There are various strategies for doing this (for example, I could create a custom UserControl or use Sitefinity Services), but I chose to create a new ~/blogimport.aspx page in my Sitefinity web site.

The instructions below describe how to create this ASPX page:

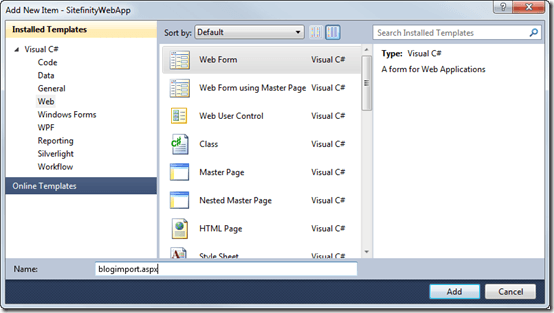

1. Right-click the SitefinityWebApp project in the Solution Explorer and click New Item

2. Select Web Form and type blogimport.aspx for the Name

3. Open the code-behind file for the blogimport.aspx page

Step 4: Create the code

The code for fetching the RSS items and importing these items into Sitefinity (using the Fluent API) is posted below. Comments have been added throughout the code to explain what is happening.

~/blogimport.aspx.cs

    using System;
    using System.Linq;
    using System.Xml.Linq;
    using Telerik.Sitefinity;

    namespace SitefinityWebApp
    {
        public partial class blogimport : System.Web.UI.Page
        {
            protected void Page_Load(object sender, EventArgs e)
            {
                // Get the RSS feed from Sitefinity Watch
                var blogRSS = XDocument.Load("http://feeds.feedburner.com/SitefinityWatch");

                // Get the RSS items from the feed
                var rssItems = from d in blogRSS.Descendants("item")
                               select d;

                // If the executing code isn't wrapped in a using, then changes aren't committed
                using (var fluent = App.WorkWith())
                {
                    // Get the blog object associated with my Sitefinity Watch blog
                    var blog = (from b in fluent.Blogs().Get()
                                where b.Title == "Sitefinity Watch"
                                select b).FirstOrDefault();

                    // Stop early if the blog from Step 1 doesn't exist yet
                    if (blog == null)
                    {
                        Response.Write("Blog 'Sitefinity Watch' was not found.<br />");
                        return;
                    }

                    // Print the ID, to confirm I got it
                    Response.Write(blog.Id.ToString() + "<br />");

                    // Loop through each RSS item
                    foreach (var item in rssItems.ToList())
                    {
                        // Print the Title for each blog post
                        Response.Write(item.Element("title").Value + "<br />");

                        // Add the blog post from the RSS feed to my Sitefinity 4.0 blog
                        blog.BlogPosts.Add(
                            fluent.BlogPost()
                                .CreateNew()
                                .Do(b =>
                                {
                                    b.Title = item.Element("title").Value;
                                    b.Content = item.Element("description").Value;
                                    b.PublicationDate = DateTime.Now;
                                    b.ExpirationDate = DateTime.Now.AddDays(10);
                                })
                                .Get()
                        );
                    }
                }
            }
        }
    }

Step 5: Import the RSS blog posts

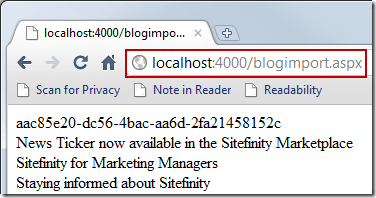

The blog posts found in the RSS feed can be imported by accessing the import page in a web browser. Because the Sitefinity Fluent API is bound by Sitefinity permissions, you must be authenticated before accessing the page.

1. Log in to Sitefinity

2. Access the ~/blogimport.aspx page in a web browser.

3. View the blog in Sitefinity to confirm the blog posts were imported.

Some parting words

This is a nice first step. However, this is not a fully developed import process. The technique described above has several challenges associated with it.

For example:

- Old URLs are not preserved

- Images associated with the blog posts aren’t imported

- Only blog posts found in the RSS feed (15 posts) are imported

These are challenges that I still need to work through. In the meantime, this might be useful to anyone who is experimenting with Sitefinity’s Fluent API.
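As a possible starting point for the first two challenges, the sketch below pulls the original URL, the publication date, and any image URLs out of each RSS item. It uses only System.Xml.Linq and the base class library and does not call the Sitefinity API at all. The names RssItemDetails and RssItemReader are hypothetical, and it assumes the feed fills in the standard RSS 2.0 link and pubDate elements and embeds images as double-quoted img tags in the description. Treat it as a rough sketch under those assumptions, not a finished solution.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;
using System.Text.RegularExpressions;
using System.Xml.Linq;

// Hypothetical holder for per-item details that the import code does not yet use.
public class RssItemDetails
{
    public string Title;
    public string OldUrl;                // value of <link>, could drive redirects later
    public DateTimeOffset? PublishedOn;  // parsed <pubDate>, null if missing or malformed
    public List<string> ImageUrls = new List<string>();
}

public static class RssItemReader
{
    // Deliberately simple pattern: it only matches double-quoted src attributes.
    private static readonly Regex ImgSrc =
        new Regex("<img[^>]+src=\"([^\"]+)\"", RegexOptions.IgnoreCase);

    public static List<RssItemDetails> Read(XDocument feed)
    {
        return feed.Descendants("item").Select(item =>
        {
            // Casting an XElement to string yields null when the element is absent.
            var details = new RssItemDetails
            {
                Title = (string)item.Element("title"),
                OldUrl = (string)item.Element("link")
            };

            // Keep the original publication date when it can be parsed.
            DateTimeOffset parsed;
            if (DateTimeOffset.TryParse((string)item.Element("pubDate"),
                    CultureInfo.InvariantCulture, DateTimeStyles.None, out parsed))
            {
                details.PublishedOn = parsed;
            }

            // Collect image URLs so they could be downloaded in a later pass.
            var html = (string)item.Element("description") ?? string.Empty;
            details.ImageUrls.AddRange(
                ImgSrc.Matches(html).Cast<Match>().Select(m => m.Groups[1].Value));

            return details;
        }).ToList();
    }
}
```

Calling RssItemReader.Read(blogRSS) on the already loaded feed would return one entry per item. PublishedOn could then stand in for DateTime.Now when setting the publication date, and OldUrl could be recorded for redirects. Importing more than the 15 posts in the feed would still need a different data source.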

Thanks to Ivan Dimitrov and Robert Shoemate for helping me with this code.

The Progress Team
